enabling businesses of all sizes to build their own high performance web-scale storage for their private cloud. Inspired by the highly scalable, commodity-hardware based approaches of public clouds, the company is developing the first flash-tuned scale-out storage architecture designed for private clouds that delivers unparalleled performance and simplified management at public cloud capacity pricing.

So, what is Coho Data?

Coho Data’s product is a software stack that runs, and ships, on commodity hardware.

Coho Data specifically targets mid-size to large enterprises, ISPs, and application service providers, while remaining open to all comers. Currently VMware-only, Coho Data uses NFSv3 as its underpinnings, as that is where VMware sales currently are. It aims to fix problematic workloads: VMs, SQL Server, Oracle DB.

"Network pain becomes storage pain a few years later"

says Coho Data's Andy Warfield

Remote asynchronous replication is now available in Coho Data’s v2.0 release.

Debuting here at Storage Field Day 6 is…tada…Coho Data v2.0

V2.0 starts out with a very cool UI.

We are walked through a demo by Coho Data’s CTO and co-founder, Andy Warfield.

“…all storage arrays will be hybrid…but they won’t have disks.”

Andy Warfield

Utilizing RESTful interfaces and APIs, and leveraging JSON, v2.0 is going to prove quite important in console management and process workflows in the enterprise. In the API walkthrough, Andy showed us the dev console, which had JSON output and graphs integrated into it. We learned how v2.0 uses NVMe data paths, with submission/completion queues optimized across multiple cores and NICs. The API also allows admins to build best practices for special use cases or workloads, and especially for apps. It also allows users to perform Big Data analysis and optimization.
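For readers unfamiliar with the queue model mentioned above: NVMe pairs each submission queue with a completion queue, and drivers typically give each CPU core its own pair so that cores do not fight over a shared lock. The toy Python model below is only my own illustration of that idea. The names `QueuePair` and `build_queue_pairs` are made up for this post and are not taken from Coho Data's code or API.

```python
from collections import deque


class QueuePair:
    """Toy model of one NVMe-style submission/completion queue pair.

    Hypothetical illustration only; not Coho Data's implementation.
    """

    def __init__(self, core_id):
        self.core_id = core_id
        self.submission = deque()   # commands waiting for the device
        self.completion = deque()   # results posted back by the device

    def submit(self, command):
        # The host places a command on this core's submission queue.
        self.submission.append(command)

    def process(self):
        # Stand-in for the device: drain submissions in order
        # and post one completion entry per command.
        while self.submission:
            command = self.submission.popleft()
            self.completion.append((command, "ok"))

    def reap(self):
        # The host collects and clears the finished completions.
        done = list(self.completion)
        self.completion.clear()
        return done


def build_queue_pairs(num_cores):
    # One independent queue pair per core, so no queue is shared.
    return {core: QueuePair(core) for core in range(num_cores)}
```

Because each core only ever touches its own pair, work submitted on one core never waits on a queue owned by another, which is the property that makes this layout attractive on multi-core boxes.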

With Coho Data v2.0, admins can declutter their NFS namespace trees to reduce workload contention across multiple cores. Preparing TCP packet headers and transferring them to other nodes is also a snap.

Andy also laid out a 3rd-party trust model for storage.

“Preparing packet header like an ‘envelope’ for other micro arrays, only possible if correctly architected from start,” says Andy Warfield.

As I see it

Their vision is coherent, and very mindful of the meagre resources their startup has.

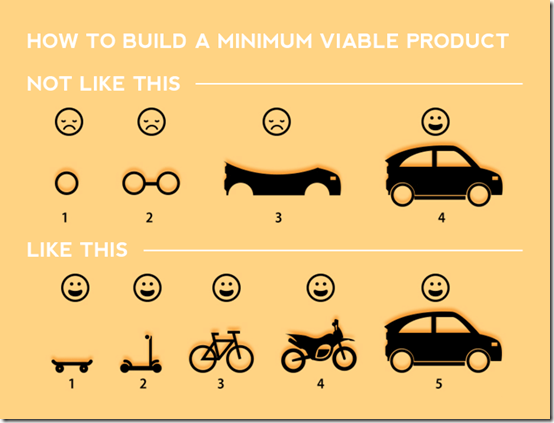

While Andy offered quite a few notable quotes, one of the things I best remember from this visit to the Coho offices is the graphic above.

Thank you to all at Coho Data for their hospitality, and for this excellent LEGO minifig.

© 2002 – 2014, John Obeto for Blackground Media Unlimited
